ChainRxn

Description as a Tweet:

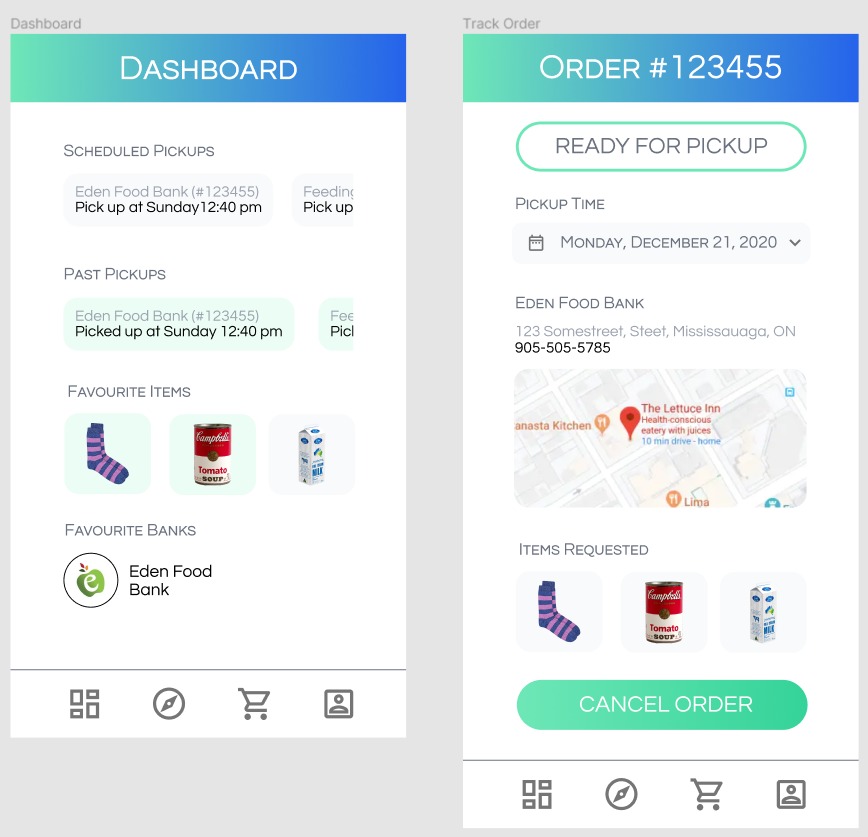

ChainRxn is a mobile and web app developed using React JS, GraphQL, and Figma, which offers a contactless experience for people who use, run, and give to food banks. It allows you to request items to pick up, donate, and restock at local donation banks all from one platform.

Inspiration:

After conducting research online, we found that many food banks and donation centers still rely on systems that are inefficient and not automated. We also discovered that the success of these banks is especially low right now due to physical distancing. We wanted to change that by offering a seamless app through which everyone involved with a community food bank can contribute to or benefit from the facility as virtually as possible.

What it does:

Our project is still in development. So far we have completed the back-end, planned the front-end graphically in Figma, trained a custom Google Cloud Open Machine-Learning model on different types of donations (for our restocking functionality), and programmed most of the front-end using React JS and GraphQL. Once complete, the app will let donors select items to drop off through a scheduling system; let food bank admins view AI-generated graphs projecting their bank's needs and restock large donations using camera-based, multiple-image recognition software; and let those in need of items from the banks schedule pick-ups for pre-selected items. All of these users will be able to explore donation centers near them.
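
The scheduling idea above can be illustrated with a small sketch. This is not the project's actual code: the type names, the `bookSlot` function, and the per-slot capacity rule are all assumptions, chosen only to show how drop-offs and pick-ups might share one booking model while limiting how many people arrive at once.

```typescript
// Hypothetical types: none of these names come from the ChainRxn codebase.
type VisitKind = "dropOff" | "pickUp"; // donors drop off, recipients pick up

interface Booking {
  userId: string;
  kind: VisitKind;
  items: string[];   // items pre-selected in the app
  slotStart: string; // ISO 8601 start time of the slot
}

interface SlotPolicy {
  capacityPerSlot: number; // assumed cap on visits per slot, to limit contact
}

// Returns a new booking list that includes the request.
// Throws if the user already holds the slot or the slot is full.
function bookSlot(
  existing: Booking[],
  request: Booking,
  policy: SlotPolicy
): Booking[] {
  const sameSlot = existing.filter((b) => b.slotStart === request.slotStart);
  if (sameSlot.some((b) => b.userId === request.userId)) {
    throw new Error("user already has a booking in this slot");
  }
  if (sameSlot.length >= policy.capacityPerSlot) {
    throw new Error("slot is full");
  }
  return [...existing, request];
}
```

Returning a new list instead of mutating the input keeps the function easy to test and to reuse from either a GraphQL resolver or a React component, though a real deployment would also need to enforce the capacity check in the database.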

Our Figma plan outlines the entire project: https://www.figma.com/file/VhLZWJf81fFHIOsYRguEyy/Banks-Sorted?node-id=2%3A6.

How we built it:

We designed the app on Figma. Then we used MongoDB, GraphQL, React, and Node.js to implement the design. We also tried to use Google Cloud Vision for object detection.

Technologies we used:

- HTML/CSS

- JavaScript

- Node.js

- React

- AI/Machine Learning

Challenges we ran into:

We didn't know how to create a custom machine learning model for food bank items. When we did find a dataset that would serve our needs, we had trouble uploading it correctly to Google Cloud Storage to use with Google Vision's training algorithm. Lastly, we didn't write code that was modular enough; with three different types of users, more modularity would have helped us save time.
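
As an illustration of the modularity point, here is a minimal sketch of one way the three user types could share code. It is an assumption, not what we built: the `UserRole` and `Action` names and the `can` helper are hypothetical, and the actions are simply taken from the feature list described earlier.

```typescript
// Hypothetical names: this table is an illustration, not the project's code.
type UserRole = "donor" | "admin" | "recipient";

type Action =
  | "exploreCenters"
  | "scheduleDropOff"
  | "schedulePickUp"
  | "viewNeedsForecast"
  | "restockInventory";

// Actions every user type gets, defined once instead of three times.
const SHARED_ACTIONS: Action[] = ["exploreCenters"];

// Actions specific to each user type.
const ROLE_ACTIONS: Record<UserRole, Action[]> = {
  donor: ["scheduleDropOff"],
  admin: ["viewNeedsForecast", "restockInventory"],
  recipient: ["schedulePickUp"],
};

// Full list of actions a given role may perform.
function allowedActions(role: UserRole): Action[] {
  return [...SHARED_ACTIONS, ...ROLE_ACTIONS[role]];
}

// Single permission check that screens and resolvers could both call.
function can(role: UserRole, action: Action): boolean {
  return allowedActions(role).indexOf(action) !== -1;
}
```

With a table like this, adding a feature for one user type means editing one entry, and shared screens such as the donation center explorer only need to be written once.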

Accomplishments we're proud of:

We are proud of having explored a variety of technologies that were new to us through this project, including Radar.io and Google Cloud's Open ML, Vision AI, and TensorFlow image classification and object detection techniques.

What we've learned:

We learned the importance of proper project management while building this. We realized that an amazing, impactful idea must be supported by a thorough plan and schedule. We also learned when to switch from troubleshooting to a new strategy altogether in order to save time.

What's next:

We hope to develop our project to the level we had originally envisioned. We plan to finish integrating our back-end with our React front-end and to deploy our trained ML model within the app.

Built with:

We used Figma, MongoDB, GraphQL, React JS, Google Cloud Open ML, Node.js, and JavaScript.

Prizes we're going for:

- Best Web Hack

- Best Mobile Hack

Team Members

Suhana Nadeem